How to configure aider and Continue with o3-mini and DeepSeek-R1 deployed in Azure AI Foundry

A step-by-step guide to configure aider and Continue with Azure-hosted o3-mini and DeepSeek-R1 LLMs for AI-assisted development

I’ve just released v0.7 of Grabit, my little command line app for saving full-text copies of webpages. It brings support for saving Reddit posts (I really wanted to do this), and custom user agents (I didn’t really want to do this, but here we are). It also prettifies the markdown to make sure it looks just the way it should; nobody likes 10 blank rows before every bullet point. Using o1 to automate the boring parts: one more interesting thing is that I’m experimenting with using an LLM to help me keep the README in sync with the new changes, and in general help me automate the boring parts of releasing a new version....

How to integrate smolagents with Azure OpenAI to build Python-driven AI agents. Also, lots of ducks.

How Azure OpenAI’s prompt caching feature works, its benefits, caveats, and a quick experiment

A web page downloader for humans and large language models alike

A bit of a rant on the state of dependency injection in Python/FastAPI, and an implementation using the Injector and FastAPI-Injector libraries

Interesting things I’ve learned in week 2 - 8 December 2024 (apart from the fact that democracy is fragile)

Understand the differences in pricing between Azure OpenAI and OpenAI for fine-tuning AI models, with a detailed analysis of token and hosting costs.

I heard you like OpenAI, so I used OpenAI’s Whisper to transcribe the OpenAI DevDay Keynote, OpenAI GPT-4 Turbo to summarize the transcript, come up with ideas that illustrate the main points and generate DALL-E prompts for said ideas, OpenAI DALL·E 3 to generate the images, and OpenAI Text to Speech to narrate the summary. Xzibit would be like, so proud.

About Orca-2: the fine folk at Microsoft Research have recently published Orca 2, a new small large language model and apparently, it’s quite good! Just look at the test results below – on average, both the 7B and the 13B variants are significantly better than Llama-2-Chat-70B, with Orca-2-13B surpassing even WizardLM-70B. Pretty cool! 🚀 I also love the idea behind it: prompting a big large language model (in our case GPT-4) to answer some rather convoluted logic questions while aided by some very specific system prompts, and then fine-tuning a smaller model (Llama-2-7B and 13B respectively) on just the question and answer pairs, leaving out the detailed system prompts....

These are the slides and notebook I’ve used during my talk on how to build an Internet-connected search assistant almost from scratch. AKA Poor Man’s BingChat. The first time I talked about it was at Codecamp Iasi, where it got a lot of positive feedback, plus it was awesome to share the stage with established speakers (and personal heroes of mine) like Mark Richards, Venkat Subramaniam, Eoin Woods, and Dylan Beattie. Yes, you can see them in the hero picture 😱....

Recently, I was curious to see how easy it would be to run Llama2 on my MacBook Pro M2, given the impressive amount of memory it makes available to both CPU and GPU. This led me to the excellent llama.cpp, a project focused on running simplified versions of the Llama models on both CPU and GPU. The process felt quite straightforward except for some instability in the llama.cpp repo just as I decided to try it out, which has since been fixed....

An experiment with prompt injecting Bing Chat – successfully changing its persona, exploring data extraction potential, limitations, and future implications.

My top 3 tips for working better, faster, and just a bit stronger with Azure ML Compute Instances

A quickstart guide to deploying machine learning models in production using Azure Machine Learning’s managed online endpoints

A guide to creating GPU compute instances on Azure ML, installing Stable Diffusion, and running AUTOMATIC1111’s Web UI.

Because life’s too short to deploy things manually

A glimpse of the upcoming paradigm shift in how we do development

The issue with machine learning pipelines is that they need to pass state from one step to another. When this works, it’s a beautiful thing to behold. When it doesn’t, well, it’s not pretty, and I think the clip below sums this up pretty well. “made a Rube Goldberg machine” pic.twitter.com/gWRNnmm5Ic (COLiN BURGESS, @Colinoscopy, April 30, 2020) Azure ML Pipelines are no stranger to this need for passing data between steps, so you have a variety of options at your disposal....

A story about love, loss, and caching

How to create a model based on an Azure AutoML-trained baseline, using standard open-source components where possible and adapting AutoML specific code where needed

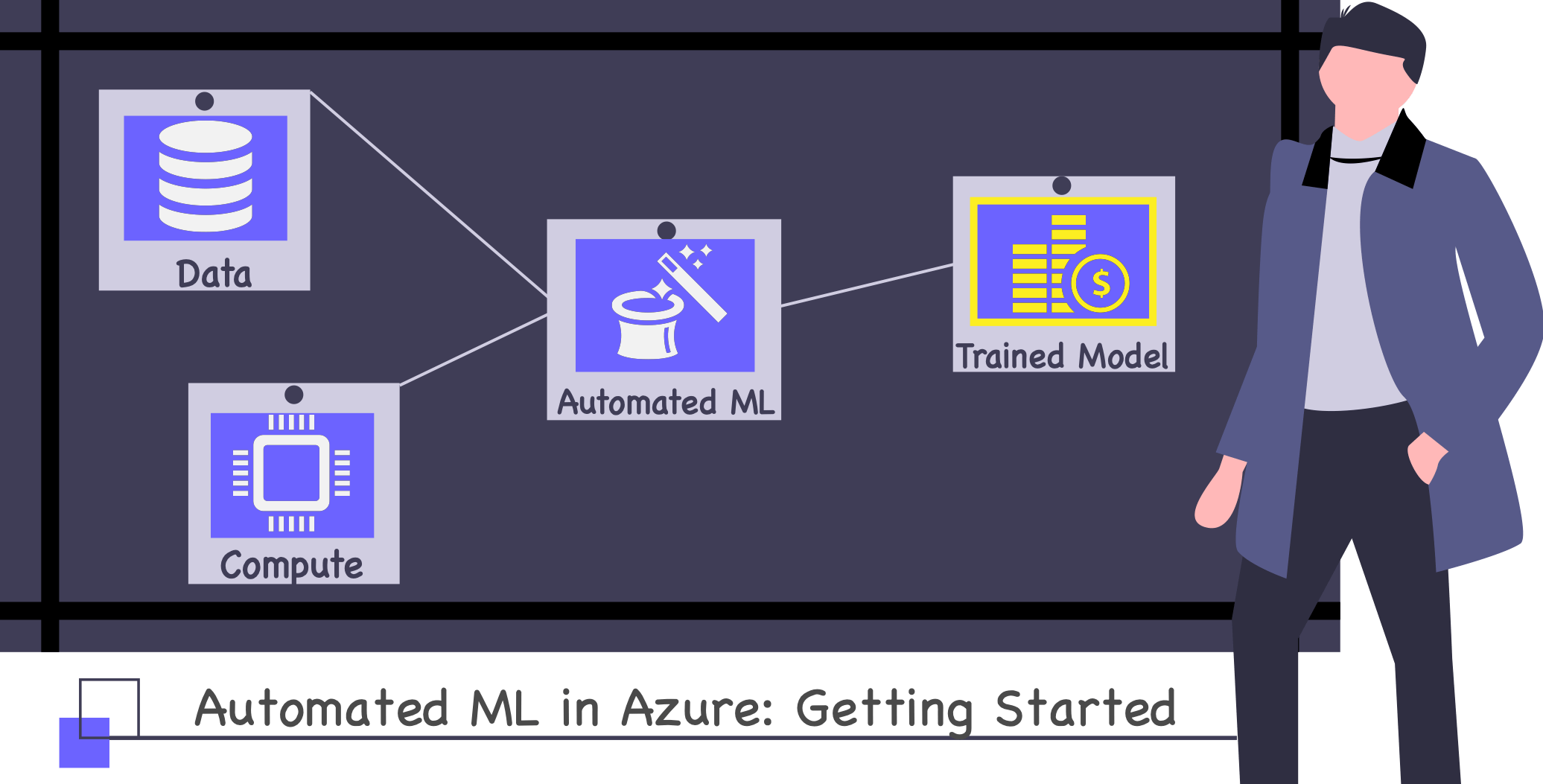

The first in a series of articles about building production machine learning systems in Azure, thinly veiled as an attempt to predict cryptocurrency prices

Machine learning pipelines are a way to describe your machine learning process as a series of steps such as data extraction and preprocessing, but also training, deploying, and running models. In this article, I’ll show you how you can use Azure ML Pipelines to deploy an already trained model such as this one, and use it to generate batch predictions multiple times a day. But before we do that, let’s understand why pipelines are so important in machine learning....

A step-by-step introduction to Automated Machine Learning in Azure while gathering data, creating the necessary Azure resources, and automatically training a model

A quick and dirty tutorial for migrating from Mercurial to everyone’s favorite distributed version control system, Git

Long title, I know 🤫. It used to be shorter, as some earlier versions of this talk were called ‘Predicting Survivability on the Titanic’, but this time I wanted to experiment a bit and make it real easy for the audience to decide whether or not this would be interesting for them. And so they did. You see, they wanted to learn more about machine learning. And, the way I see it, the two tools I talked about - Azure Machine Learning Studio and Kaggle Competitions - can help you get started with ML, while also making it fun to do so....

This is a more detailed version of my Boy meets Girl talk, created specially for Microsoft Ignite | The Tour Amsterdam 2019. Whereas Boy meets Girl was mostly focused on how to deploy a trained model using either Azure ML Service or ML Studio, here I wanted to create a more in-depth comparison of the two tools. This is what led me to the concept of having multiple rounds, with the audience voting for their favourite tool (truth be told, I think I just wanted another go at delivering something similar to my TypeScript versus CoffeeScript talk 🤓)....

This was a fun talk to write :). Ever since I saw Azure ML Service being announced, I knew I wanted to compare it with ML Studio, a tool with which I had a bit more experience. And so I did. Since 45 minutes is nowhere near enough to compare the two tools (lesson re-learned the hard way while designing Service versus Studio), I decided to only compare their deployment capabilities, given an already trained model....